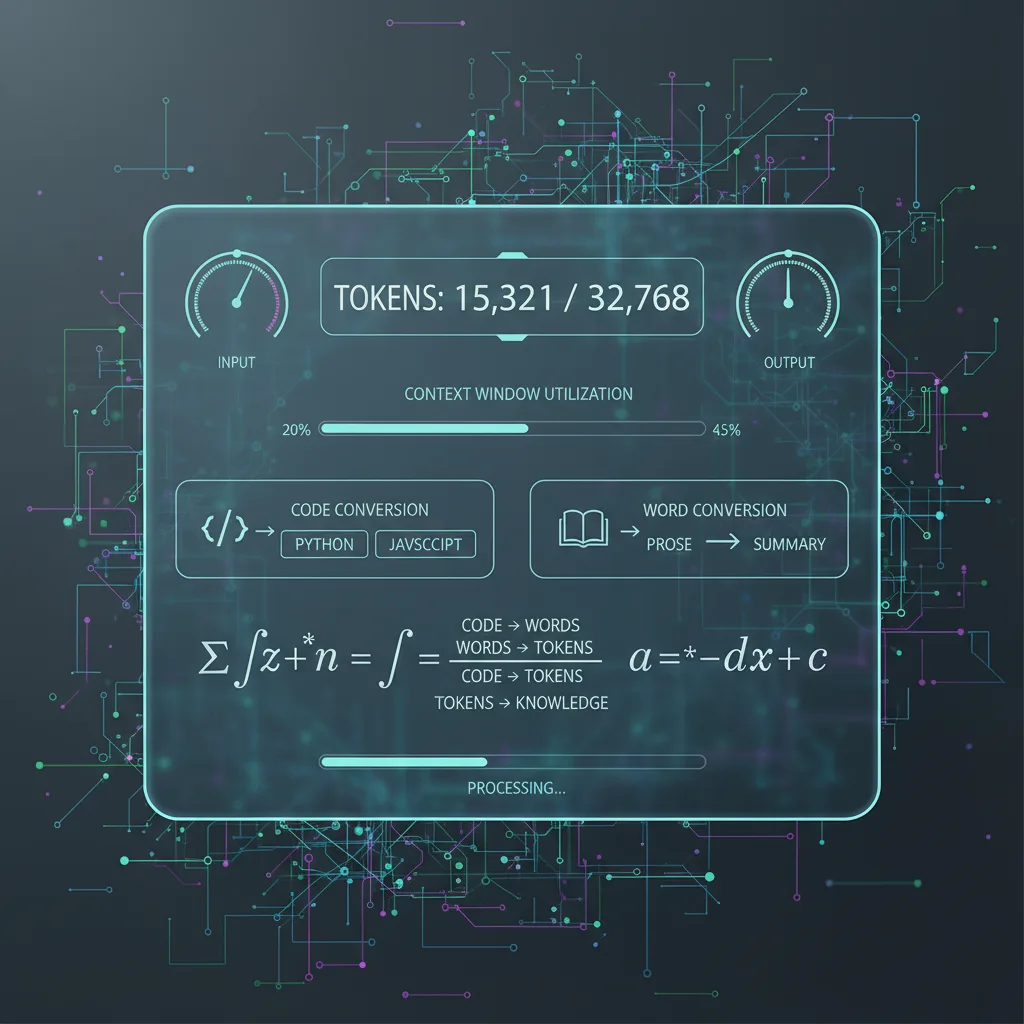

I’m not sure if you’re like me, but maybe this sounds familiar: you spend hours crafting a perfect multipage prompt, only to have your favorite AI chat disregard half the instructions you fed it. In the past, this could have happened because your prompt exceeded the context window (the model’s token limit, or memory), or because your prompting needed refinement.

So, how do you determine the number of tokens being used? Below is a rough guide to how much content a given token count represents:

30,000 tokens is roughly:

- 22,500 words (assuming 1 token ≈ 0.75 words)

- 120,000 characters (assuming 1 token ≈ 4 characters)

- ~45 single-spaced pages, or roughly 75–90 double-spaced pages (using standard 12pt font, at about 500 words per single-spaced page)

- About 2–3 hours of spoken audio transcript

- A short ebook, a long research paper, or a small codebase (a few thousand lines)
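The conversions above boil down to two ratios, so a quick estimate is easy to script. Here is a minimal Python sketch, assuming the rule-of-thumb ratios from the list (0.75 words and 4 characters per token). The function names `estimate_tokens` and `tokens_to_size` are made up for illustration, and a real tokenizer will give different counts depending on the model and the language of the text.

```python
import math

# Rule-of-thumb ratios from the list above (assumptions, not exact values).
WORDS_PER_TOKEN = 0.75
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> dict:
    """Roughly estimate the token count of `text` from its word and character counts."""
    word_count = len(text.split())
    char_count = len(text)
    return {
        "by_words": math.ceil(word_count / WORDS_PER_TOKEN),
        "by_chars": math.ceil(char_count / CHARS_PER_TOKEN),
    }


def tokens_to_size(tokens: int) -> dict:
    """Convert a token budget into approximate word and character counts."""
    return {
        "words": round(tokens * WORDS_PER_TOKEN),
        "characters": tokens * CHARS_PER_TOKEN,
    }


if __name__ == "__main__":
    print(tokens_to_size(30_000))
    print(estimate_tokens("Paste your prompt here to get a rough token estimate."))
```

Calling `tokens_to_size(30_000)` reproduces the figures above: 22,500 words and 120,000 characters. The two estimates from `estimate_tokens` will rarely agree exactly, so treat the larger one as the safer guess.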

*Images only use tokens if the model is analyzing or describing them; the more content extracted or described, the more tokens are used. Pure image output (files) does not count toward token usage.

| Task | Do tokens count? | Typical token usage |
|---|---|---|
| Writing prompt (image creation) | Yes | Prompt only (5–100) |
| Returning image output | No | 0 (unless includes text) |
| Analyzing/describing image input/result | Yes | 50–2,000+ |
| Uploading images (no analysis) | No | 0 |
| File/image OCR or content extraction | Yes | All detected text |

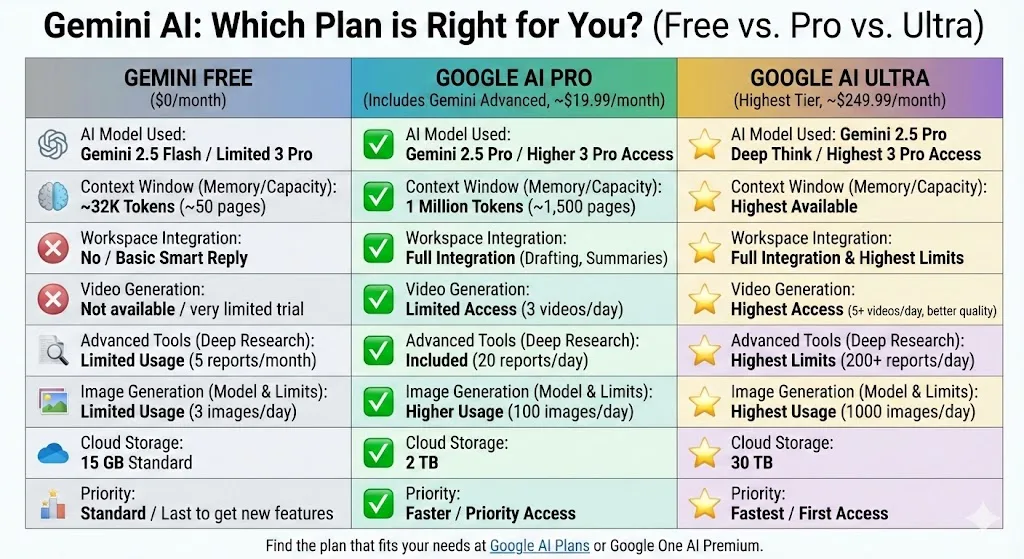

Below are the context window limits (in tokens) for the top three AI chat applications:

| Provider | Free Tier | Paid/Pro/Team | Max Token Limit (2025) |
|---|---|---|---|
| ChatGPT (OpenAI) | 8,000 | 32k, 128k, 196k | Up to 196,000 |
| Claude (Anthropic) | 100k–200k | 200k | Up to 200,000 |
| Gemini (Google) | 1M | 1M+ | Up to 1,000,000+ |

Nowadays, these limits rarely get in the way of your prompting, especially if you’re using Claude or Gemini. A free tier can still cap your token count significantly, most of all in ChatGPT.
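To see whether a long prompt is likely to fit, you can compare a rough token estimate against the limits in the table. The sketch below is only an illustration: the limits are copied from the table above and change often, the 4-characters-per-token ratio is the same rule of thumb used earlier, and the `fits_in_context` function with its `reserve_for_reply` parameter is a hypothetical helper, not part of any provider’s API.

```python
# Context limits in tokens, copied from the table above (they change often).
CONTEXT_LIMITS = {
    "chatgpt_free": 8_000,
    "chatgpt_max": 196_000,
    "claude_max": 200_000,
    "gemini_max": 1_000_000,
}

CHARS_PER_TOKEN = 4  # rule-of-thumb assumption, not an exact tokenizer count


def fits_in_context(prompt: str, limit: int, reserve_for_reply: int = 1_000) -> bool:
    """Return True if the estimated prompt size plus a reply budget fits in `limit` tokens.

    `reserve_for_reply` is a hypothetical allowance for the model's answer,
    since the reply shares the same context window as the prompt.
    """
    estimated_tokens = -(-len(prompt) // CHARS_PER_TOKEN)  # ceiling division
    return estimated_tokens + reserve_for_reply <= limit


if __name__ == "__main__":
    prompt = "word " * 10_000  # 50,000 characters, roughly 12,500 tokens
    for name, limit in CONTEXT_LIMITS.items():
        print(f"{name}: fits = {fits_in_context(prompt, limit)}")
```

With these numbers, the 50,000-character prompt overflows the 8,000-token free tier but fits comfortably in the larger windows. For an exact count, use the tokenizer or token-counting tool your provider publishes instead of the character estimate.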
